舞台裏MLラボ

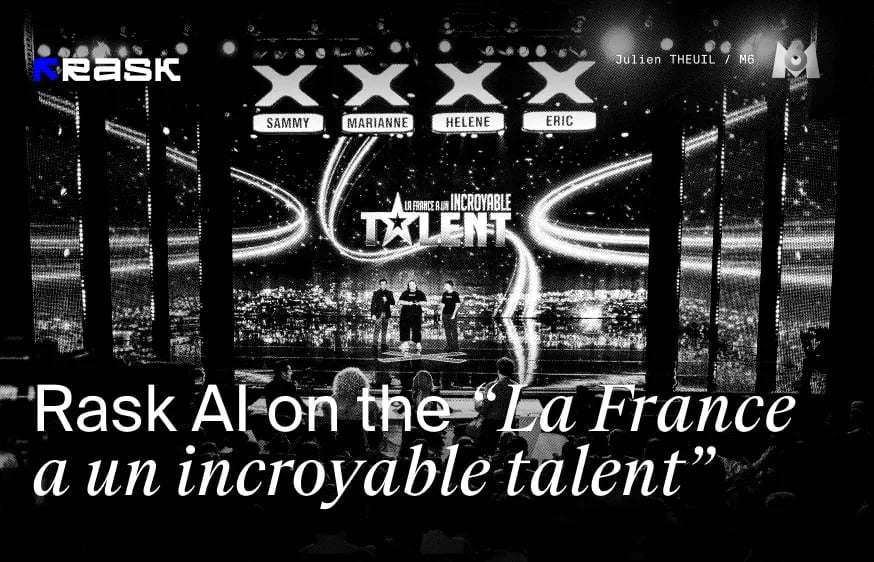

最新記事では、Rask AIのリップシンク技術のエキサイティングな世界に、同社の機械学習責任者ディマ・ヴィピライレンコの案内で飛び込みます。この革新的なAIツールがコンテンツ制作と配信にどのような波を起こしているのか、技術の中心地であるBrask MLラボの舞台裏をご紹介します。私たちのチームには、ワールドクラスのMLエンジニアやVFXシンセティックアーティストがおり、未来に適応するだけでなく、未来を創造しています。

このテクノロジーがクリエイティブ業界をどのように変革し、コストを削減し、クリエイターが世界中の視聴者にリーチできるよう支援しているのか、ぜひご参加ください。

リップシンク・テクノロジーとは?

ビデオのローカライズにおける主な課題の1つは、唇の不自然な動きです。リップシンク技術は、唇の動きを多言語音声トラックと効果的に同期させるために設計されています。

最新の記事で学んだように、リップシンクのテクニックは、ただタイミングを合わせるだけでなく、口の動きを正しくする必要があり、より複雑です。例えば、"O "は明らかに口の形が楕円になるので、"M "にはならない。

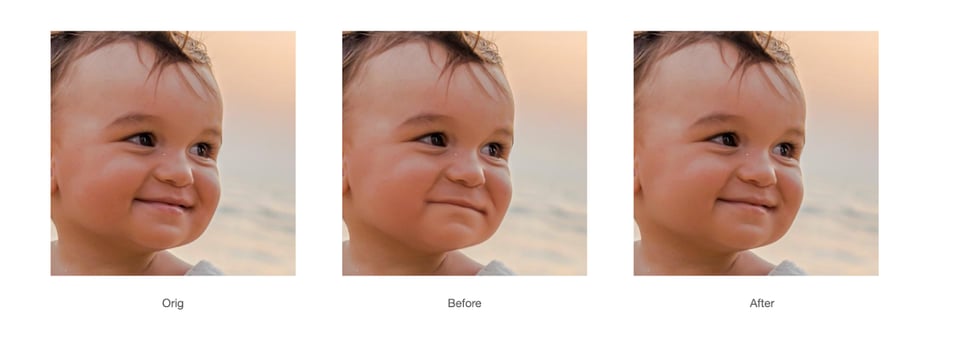

より高画質になったリップシンクの新モデルを紹介!

私たちのMLチームは、既存のリップシンクモデルを強化することを決定しました。この決断の背景にはどのような理由があったのでしょうか?また、ベータ版と比較して、このバージョンでは何が新しくなったのでしょうか?

モデルを強化するために、以下のような多大な努力が払われた:

- 精度の向上:AIアルゴリズムを改良し、話し言葉の音声的な詳細をよりよく分析し、一致させることで、複数の言語で音声と密接に同期した、より正確な唇の動きを実現しました。

- 自然さの 向上:より高度なモーションキャプチャデータを統合し、機械学習技術を洗練させることで、唇の動きの自然さを大幅に向上させ、キャラクターの発話をより流動的で生き生きとしたものにしました。

- スピードと効率の向上:品質を犠牲にすることなく、動画をより速く処理できるようモデルを最適化し、大規模なローカライズを必要とするプロジェクトの納期を短縮しました。

- ユーザーからのフィードバックの反映:ベータ版のユーザーからのフィードバックを積極的に収集し、その洞察を開発プロセスに反映させることで、特定の問題に対処し、全体的なユーザー満足度を向上させました。

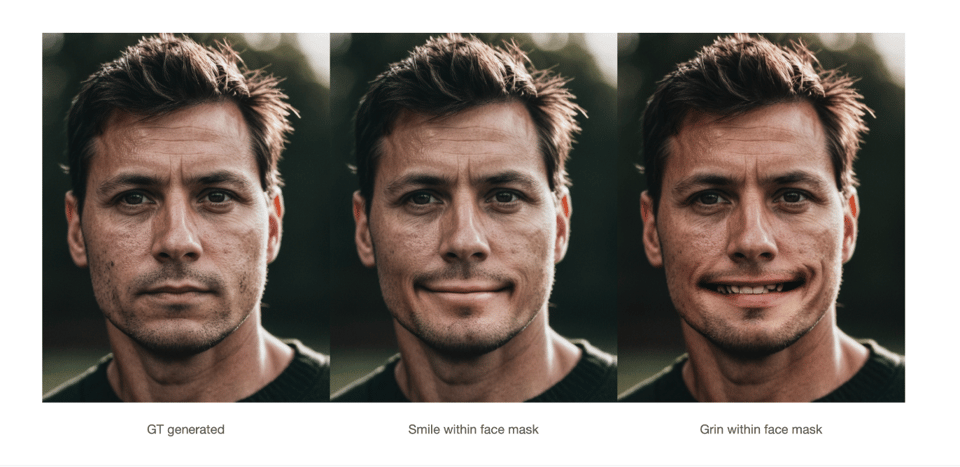

私たちのAIモデルは、具体的にどのように唇の動きと翻訳された音声を同期させているのでしょうか?

ディマ「私たちのAIモデルは、翻訳された音声の情報と、フレーム内の人物の顔の情報を組み合わせることによって動作し、最終的な出力にこれらを統合します。この統合により、唇の動きが翻訳された音声と正確に同期し、シームレスな視聴体験を提供します

プレミアム・リップシンクが高画質コンテンツに理想的なのは、どのようなユニークな特徴があるからですか?

ディマ「プレミアム・リップシンクは、マルチスピーカー機能や高解像度対応といった独自の機能により、高品質なコンテンツに対応できるよう特別に設計されています。最大2K解像度までの動画を処理することができ、妥協することなく映像品質を維持することができます。さらに、マルチスピーカー機能により、同一映像内の異なるスピーカー間で正確なリップシンクが可能なため、複数のキャラクターやスピーカーを含む複雑な制作物にも高い効果を発揮します。これらの機能により、Premium Lipsyncはプロ級のコンテンツを目指すクリエイターにとって最良の選択肢となります」。

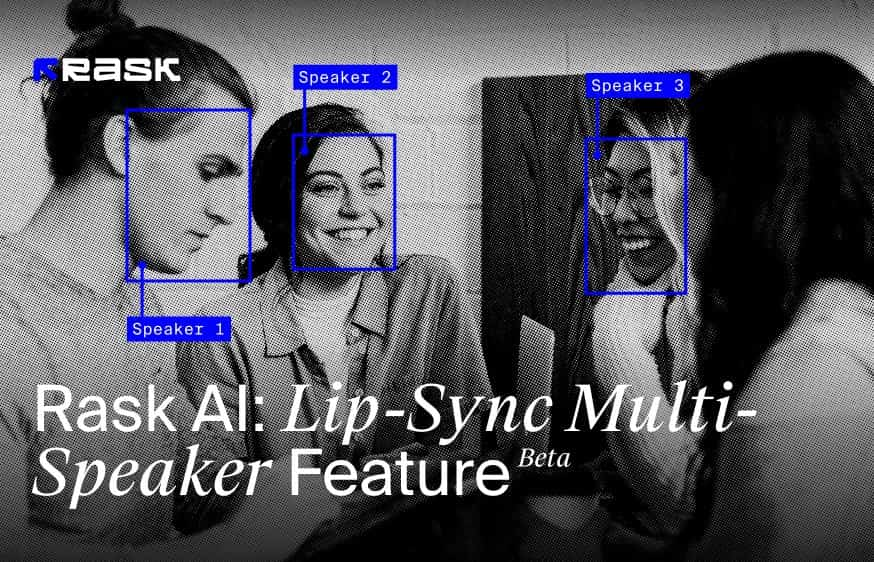

リップシンク・マルチスピーカー機能とは?

マルチスピーカー・リップシンク機能は、複数の人物が登場するビデオにおいて、唇の動きを話し手の音声と正確に同期させるように設計されています。この高度なテクノロジーは、1つのフレーム内の複数の顔を識別して区別し、各個人の唇の動きが話し言葉に従って正しくアニメーション化されるようにします。

マルチスピーカー・リップシンクの仕組み:

- フレーム内の顔認識: この機能は最初に、数に関係なくビデオフレームに存在するすべての顔を認識します。正確なリップシンクロに欠かせない、各個人の識別が可能です。

- オーディオ・マッチング: ビデオ再生中、このテクノロジーは、音声トラックを話している人物に特別に合わせます。この正確なマッチングプロセスにより、音声と唇の動きが確実に同期します。

- 唇の動きの同期: 話している人物が特定されると、リップシンク機能は話している人物の唇の動きだけを再描画します。フレーム内の話していない人の唇の動きは変更されず、映像全体を通して自然な状態を維持します。この同期は、アクティブな話し手だけに適用されるため、画面外の声やシーン内に複数の顔がある場合でも効果的です。

- 唇の静止画像への 対応: 興味深いことに、この技術は、唇の静止画像がビデオフレームに表示された場合、唇の動きを再描画するのに十分なほど洗練されており、その汎用性の高さを示しています。

このマルチスピーカー・リップシンク機能は、複数の話し手がいるシーンや複雑な映像設定において、音声に合わせて話し手の唇だけが動くようにすることで、臨場感を高め、視聴者の興味を引きます。このターゲット化されたアプローチは、アクティブなスピーカーへのフォーカスを維持し、ビデオ内のグループインタラクションの自然なダイナミクスを維持するのに役立ちます。

どの言語でも、たった1つの動画から、さまざまなオファーを多言語で紹介するパーソナライズされた動画を何百本も作成できます。この多様性は、マーケターが多様でグローバルな視聴者とエンゲージする方法に革命をもたらし、プロモーション・コンテンツのインパクトとリーチを強化します。

新しいプレミアム・リップシンクのクオリティと処理速度のバランスはどうですか?

ディマ「プレミアム・リップシンクで高品質と高速処理のバランスをとるのは難しいことですが、私たちはモデルの推論を最適化することで大きな進歩を遂げました。この最適化によって、可能な限り最高の品質を適切な速度で出力することができるようになりました」。

モデルのトレーニング中に遭遇した興味深い欠陥や驚きはありますか?

さらに、口周りの咬合を扱うことは非常に難しいことがわかっています。これらの要素は、私たちのリップシンク技術でリアルで正確な表現を達成するために、細部への注意深い配慮と洗練されたモデリングを必要とします。

MLチームは、ビデオ素材を処理する際、どのようにしてユーザーのデータのプライバシーと保護を確保しているのですか?

ディマ 私たちのMLチームは、ユーザーデータのプライバシーと保護に真剣に取り組んでいます。Lipsyncモデルでは、トレーニングに顧客データを使用しないため、個人情報が盗まれるリスクを排除しています。モデルのトレーニングには、適切なライセンスが提供されているオープンソースのデータのみに依存しています。さらに、モデルはユーザーごとに個別のインスタンスとして動作するため、最終的なビデオは特定のユーザーにのみ配信され、データのもつれを防ぎます。

私たちは、法的権利と倫理的透明性に重点を置き、コンテンツ制作におけるAIの責任ある利用を保証し、クリエイターのエンパワーメントに取り組んでいます。私たちは、あなたの動画、写真、声、肖像が明示的な許可なく使用されることがないことを保証し、あなたの個人データとクリエイティブ資産の保護を保証します。

当社は、The Coalition for Content Provenance and Authenticity (C2PA)およびThe Content Authenticity Initiativeのメンバーであり、デジタル時代におけるコンテンツの完全性と真正性への献身を反映しています。さらに、当社の創設者兼CEOであるMaria Chmirは、Women in AI Ethics™のディレクトリに掲載され、倫理的なAIの実践における当社のリーダーシップを強調しています。

リップシンク技術の発展にはどのような将来性がありますか?特に注目している分野はありますか?

ディマ 私たちのリップシンク技術は、デジタルアバターへのさらなる発展の土台になると信じています。映像制作コストをかけずに、誰もがコンテンツを制作し、ローカライズできる未来を描いています。

短期的には、今後2ヶ月の間に、モデルの性能と品質を向上させることをお約束します。私たちの目標は、4K動画でのスムーズな動作を保証し、アジアの言語に翻訳された動画での機能性を向上させることです。これらの進歩は、私たちの技術のアクセシビリティとユーザビリティを広げ、デジタルコンテンツ制作における革新的なアプリケーションへの道を開くことを目指す私たちにとって極めて重要です!強化されたリップシンク機能をお試しいただき、この機能についてのフィードバックをお寄せください。

よくあるご質問

リップシンクは、Creator Pro、Archive Pro、Business、Enterprise プランでご利用いただけます。

リップシンクが 1 分生成されると、合計分数残高から 1 分が差し引かれます。

リップシンクの分数は、ビデオをダビングするときと同様に差し引かれます。

リップシンクはダビングとは別に課金されます。例えば、1分間のビデオを1つの言語に翻訳してリップシンクするには、2分間必要です。

リップシンクを生成する前に、技術の品質を評価するために1分間無料でテストすることができます。

リップシンク生成の速度は、ビデオ内の話者の数、ビデオの時間、品質、サイズに依存します。

例えば、異なる動画に対するリップシンク生成速度の目安は以下の通りです:

スピーカー1人によるビデオ

- 4分のビデオ 1080p ≒ 29分

- 10分 1080p ≒ 2時間10分

- 10分の4Kビデオ≒8時間

3人のスピーカーによるビデオ:

- 10分間の1080p ≒ 5時間20分

- YouTube、Googleドライブからのリンクを介してビデオをアップロードするか、直接デバイスからファイルをアップロード.ターゲット言語を選択し、翻訳ボタンをクリックしてください。

- Dub video "ボタンを使って、Rask AIでビデオにナレーションを追加できます。

- 動画がリップシンクに対応しているかどうかを確認するには、「リップシンクチェック」ボタンをクリックします。

- 互換性があれば、リップシンクボタンをタップして進みます。

- 次に、ビデオに入れたい顔の数を "1 "か "2+"のどちらかで選択し、"Start Lip-sync "をタップします。注意:これは顔の数についてであり、スピーカーについてではありません。

Rask%20Lens%20A%20Recap%204.webp)